Every time someone searches on Google, Bing, or another search engine, a highly curated list of results appears – commonly referred to as the Search Engine Results Page, or SERP. These pages are rich with insight: they reveal what content ranks highest, what ads are being served, how local results differ, and which types of results are most likely to draw users' clicks. For businesses, marketers, and data analysts, SERP data offers a powerful lens into search trends, competitor strategies, and consumer intent.

But getting access to this data at scale isn’t easy. Search engines personalize results, block automated requests, and constantly update their algorithms. That’s why organizations are turning to SERP scraping tools to automate data collection and maintain a clear view of this ever-shifting landscape.

In this article, we’ll explore what SERP data can reveal, the challenges of collecting it, and how tools like Infatica’s SERP scraper make it easier to gather accurate, timely insights.

What Can You Learn from SERP Data?

SERP data is far more than just a list of ranked URLs – it’s a real-time snapshot of how search engines perceive online content, ads, and relevance. Analyzing this data unlocks critical insights across industries and functions. Here are some of the most valuable things you can learn:

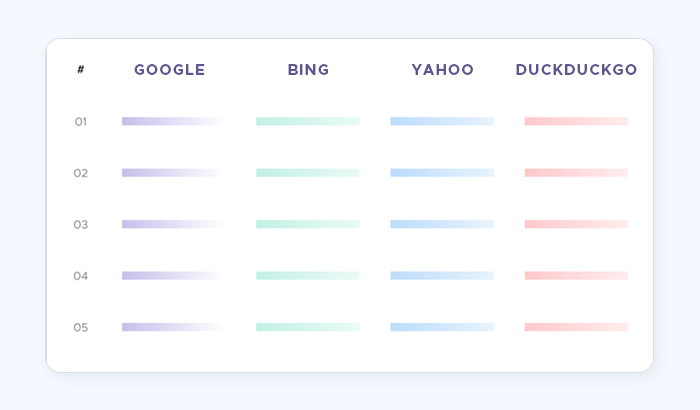

Keyword Rankings and SEO Performance

By tracking how your website or your competitors rank for specific keywords over time, you can measure the effectiveness of your SEO strategy. SERP data reveals which pages are gaining visibility, where you're losing ground, and what search features (like featured snippets or "People also ask") are appearing.
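As a rough illustration, here's one way to turn two SERP snapshots into a rank-change figure. This is a minimal Python sketch that assumes you already have each snapshot as an ordered list of organic result URLs. The function names and sample URLs are hypothetical, and the sketch ignores SERP features such as featured snippets.

```python
from urllib.parse import urlparse


def rank_of(domain, result_urls):
    """Return the 1-based position of `domain` in an ordered list of
    result URLs, or None if it does not appear."""
    for position, url in enumerate(result_urls, start=1):
        host = urlparse(url).hostname or ""
        # Match the domain itself or any subdomain (e.g. www.example.com).
        if host == domain or host.endswith("." + domain):
            return position
    return None


def rank_change(domain, earlier, later):
    """Positive = moved up, negative = moved down, None = unranked in a snapshot."""
    before, after = rank_of(domain, earlier), rank_of(domain, later)
    if before is None or after is None:
        return None
    return before - after


# Hypothetical snapshots for the same keyword on two different days.
monday = ["https://competitor.com/guide", "https://www.example.com/post", "https://other.org/"]
friday = ["https://www.example.com/post", "https://competitor.com/guide", "https://other.org/"]

print(rank_of("example.com", monday))              # 2
print(rank_change("example.com", monday, friday))  # 1
```

Run daily across a keyword list, the same comparison gives you a simple time series of visibility per domain.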

Competitor Intelligence

SERP data shows who dominates your target keywords and how they structure their content. You can analyze title tags and meta descriptions, and combine them with third-party metrics such as domain authority, to understand what’s working for others – and how to adapt your strategy accordingly.

Market Demand and Content Opportunities

Trends in search queries and featured content provide clues about what your audience is interested in. By spotting gaps in the SERP – where few relevant results exist – you can identify opportunities to create content that meets real demand.

Paid Search and Ad Monitoring

If you’re running Google Ads or managing paid campaigns, SERP data helps you see who else is bidding on your keywords, how often ads appear, and how competitive the space is. Monitoring ad creatives and placements over time also supports A/B testing and brand positioning.

Local and Mobile Insights

With search results varying based on location and device type, SERP data enables businesses – especially those with physical locations – to assess how well they perform in specific regions or cities, and across mobile vs. desktop experiences.

The Challenges of Collecting SERP Data

While the insights from SERP data are incredibly valuable, extracting that data at scale is no simple task. Search engines are designed to serve users – not scrapers – which means they actively work to prevent automated access. Here are the key challenges businesses face when collecting SERP data:

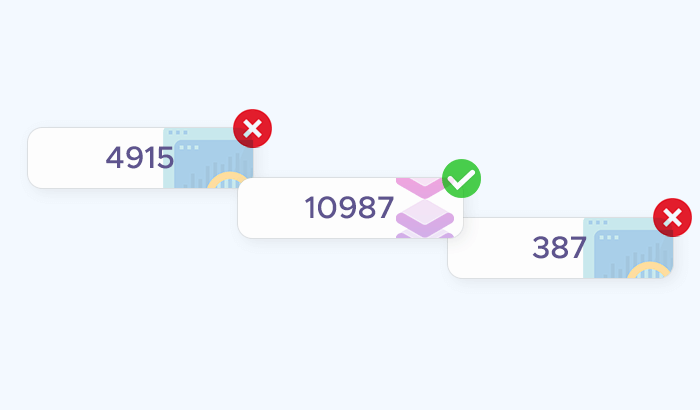

Anti-Bot Measures

Search engines deploy sophisticated bot detection systems that can identify and block automated traffic. Techniques like rate limiting, IP blacklisting, and CAPTCHA challenges can quickly halt scraping efforts if not handled properly.
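Rate limiting is usually the first wall a scraper hits. A common mitigation is to slow down and retry rate-limited requests with exponential backoff and random jitter. The Python sketch below assumes a caller-supplied `fetch()` function (hypothetical) that returns a status code and a body. It won't get past CAPTCHAs or IP bans; it only avoids hammering a server that has already signaled "too many requests".

```python
import random
import time

RETRYABLE = {429, 503}  # "Too Many Requests" and "Service Unavailable"


def fetch_with_backoff(fetch, max_retries=5, base_delay=1.0, sleep=time.sleep):
    """Call `fetch()` and retry rate-limited responses with exponential backoff.

    `fetch` is a hypothetical callable returning (status_code, body).
    """
    for attempt in range(max_retries + 1):
        status, body = fetch()
        if status not in RETRYABLE:
            return status, body
        if attempt == max_retries:
            break  # give up and return the last rate-limited response
        # Delay doubles each attempt (base, 2x base, 4x base, ...) plus up to
        # one base_delay of jitter so parallel workers don't retry in lockstep.
        delay = base_delay * (2 ** attempt) + random.uniform(0, base_delay)
        sleep(delay)
    return status, body


# Usage with a fake fetch that is rate-limited twice, then succeeds.
responses = iter([(429, ""), (429, ""), (200, "<html>...</html>")])
waits = []
print(fetch_with_backoff(lambda: next(responses), sleep=waits.append)[0])  # 200
```

Passing `sleep` as a parameter keeps the function easy to test; in production you would leave the default `time.sleep`.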

Geolocation and Personalization

Search results can vary widely based on a user's location, language, browsing history, and device type. To gather truly representative SERP data, scrapers need to simulate different user environments – often requiring proxy rotation, geotargeting, and user-agent spoofing.
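To make "proxy rotation" and "user-agent spoofing" concrete, here's a minimal Python sketch that pairs each query with the next proxy and user agent in a round-robin. The proxy URLs and user-agent strings are hypothetical placeholders, not real endpoints; a managed proxy service would normally handle this step for you.

```python
import itertools

# Hypothetical placeholders: substitute your own proxy endpoints and UA strings.
PROXIES = [
    "http://user:pass@proxy-us.example:8000",
    "http://user:pass@proxy-de.example:8000",
    "http://user:pass@proxy-jp.example:8000",
]
USER_AGENTS = [
    "ExampleDesktopBrowser/1.0",
    "ExampleMobileBrowser/1.0",
]


def request_plan(queries, proxies, user_agents):
    """Yield one request config per query, rotating proxies and user agents."""
    proxy_cycle = itertools.cycle(proxies)
    ua_cycle = itertools.cycle(user_agents)
    for query in queries:
        yield {
            "query": query,
            "proxy": next(proxy_cycle),
            "headers": {"User-Agent": next(ua_cycle)},
        }


for job in request_plan(["running shoes", "trail shoes", "hiking boots", "sandals"],
                        PROXIES, USER_AGENTS):
    print(job["query"], "->", job["proxy"])
```

Each config can then be handed to whatever HTTP client you use; with Python's standard library, for instance, `urllib.request.ProxyHandler` accepts a mapping from scheme to proxy URL.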

Dynamic Content and SERP Features

Modern SERPs include more than blue links. Featured snippets, image carousels, local packs, and “People also ask” boxes are rendered dynamically and vary across queries. Capturing this structured and unstructured data accurately requires flexible, resilient scraping logic.

High Volume and Frequency Requirements

To monitor keyword rankings, ad placements, or competitor performance effectively, data must be collected at a high frequency across thousands – or even millions – of queries. This demands a scraping infrastructure that can scale without breaking or violating service terms.

Legal and Ethical Boundaries

Scraping public data is legal in many jurisdictions, but it’s important to respect terms of service, robots.txt files, and applicable data privacy regulations. Working with a compliant solution helps reduce risk and maintain operational integrity.

Comparing Approaches to SERP Data Extraction

| Approach | Pros | Cons | Best For |
|---|---|---|---|
| Manual Collection | Simple to start; no technical setup needed | Time-consuming, low scalability, prone to human error | One-time audits, small-scale tasks |
| Open-Source Libraries | Customizable, free to use | Requires development time; breaks easily due to SERP changes | Developers with time & expertise |
| In-House Scraper | Full control over data and logic | High maintenance, IP rotation needed, anti-bot mitigation | Large teams with scraping capacity |
| SERP APIs | Fast, scalable, handles anti-bot defenses | Cost varies with volume; limited flexibility | Teams needing reliable data quickly |
| Commercial Scrapers | All-in-one solution, often includes proxies & parsing | Subscription cost; vendor lock-in risk | Businesses seeking ease and scale |

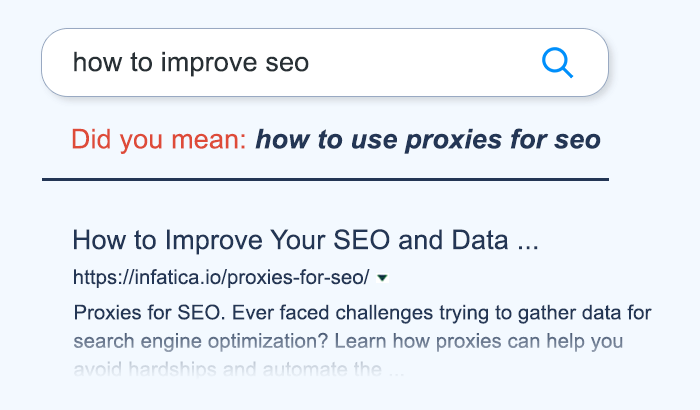

How Infatica’s SERP Scraper Helps

For businesses and data teams looking to extract SERP data at scale, Infatica’s SERP scraper provides a robust, flexible, and developer-friendly solution. It’s built to overcome the most common challenges of SERP data collection – while streamlining performance and minimizing maintenance. Here’s what sets it apart:

Managed Proxy Network

Infatica’s scraper comes backed by a globally managed proxy infrastructure. You don’t have to worry about sourcing or rotating IPs – our system does it for you, helping maintain consistent access to search engines with minimal interruptions.

Avoid CAPTCHAs and IP Bans

Thanks to intelligent proxy rotation and the use of real desktop and mobile device fingerprints, our tool significantly reduces the likelihood of encountering CAPTCHAs or bans. This means more successful requests and less time spent troubleshooting blocked sessions.

JavaScript Rendering

Need to extract content from SERP features that rely on JavaScript? No problem. Infatica’s scraper supports full JavaScript rendering, allowing you to collect even dynamically generated elements like “People also ask” boxes, maps, and carousels.

Custom Headers and Sessions

Mimic any browser or device by customizing request headers. Maintain session persistence with cookies and keep the context of multi-step interactions – ideal for tracking changes or following pagination.
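For comparison, here's roughly what custom headers and cookie-based sessions look like if you manage them yourself with Python's standard library. This is a generic sketch and does not use Infatica's API; the user-agent string is a hypothetical placeholder.

```python
import http.cookiejar
import urllib.request


def make_session(user_agent, accept_language="en-US,en;q=0.9"):
    """Build an opener that sends fixed headers and keeps cookies between calls."""
    jar = http.cookiejar.CookieJar()
    opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(jar))
    # Replaces urllib's default User-agent; these headers go out on every request.
    opener.addheaders = [
        ("User-Agent", user_agent),
        ("Accept-Language", accept_language),
    ]
    return opener, jar


opener, jar = make_session("ExampleBrowser/1.0")
# opener.open(url) would store any Set-Cookie values in `jar` and send them back
# on later opener.open(...) calls, preserving context across paginated requests.
print(dict(opener.addheaders)["User-Agent"])  # ExampleBrowser/1.0
```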

Bulk Scraping

Efficiency is built in: you can send up to 1,000 URLs per request, dramatically reducing the time and resources needed for large-scale data collection.
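If your URL list is longer than the per-request limit, you'll need to split it into batches first. A minimal Python sketch, assuming the 1,000-URL limit stated above (check it against the current documentation); how each batch is then submitted is left out, since the request format isn't shown here.

```python
from itertools import islice

MAX_URLS_PER_REQUEST = 1000  # limit quoted above; confirm against current docs


def batches(urls, size=MAX_URLS_PER_REQUEST):
    """Yield successive lists of at most `size` URLs."""
    iterator = iter(urls)
    # islice pulls the next `size` items; an empty list means we're done.
    while batch := list(islice(iterator, size)):
        yield batch


urls = [f"https://example.com/page/{i}" for i in range(2500)]
print([len(b) for b in batches(urls)])  # [1000, 1000, 500]
```

On Python 3.12 and later, `itertools.batched` does the same job (yielding tuples instead of lists).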

Global Coverage with 190+ Geolocations

Need SERP data from São Paulo, Paris, or Tokyo? Infatica supports IP geotargeting across 190+ locations in North America, South America, Europe, and Asia – so you can analyze localized search results with precision.

Flexible Output Formats

Whether you're processing data manually or feeding it into a larger analytics pipeline, you can export results in CSV, XLSX, or JSON formats for maximum compatibility.
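If you post-process results yourself, CSV and JSON need nothing beyond Python's standard library (XLSX requires a third-party package such as openpyxl). The field names in this sketch are a hypothetical flattened schema, not the scraper's actual output format.

```python
import csv
import io
import json

# Hypothetical flattened rows: one per organic result.
rows = [
    {"keyword": "running shoes", "position": 1, "url": "https://example.com/a"},
    {"keyword": "running shoes", "position": 2, "url": "https://example.org/b"},
]


def to_csv(rows):
    """Serialize a list of same-shaped dicts to CSV text with a header row."""
    if not rows:
        return ""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0].keys()))
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def to_json(rows):
    """Serialize the same rows to pretty-printed JSON."""
    return json.dumps(rows, indent=2)


print(to_csv(rows))
print(to_json(rows))
```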

Developer-Ready Documentation

Infatica’s extensive documentation includes both sync and async API examples, Python and JavaScript code snippets, and detailed guidance on authentication, error handling, and pagination.

24/7 Support

Have a question? Hit a snag? Our expert support team is available around the clock to help you solve issues and optimize your workflow.