Organizations rely on fast, accurate, and continuous information to make informed decisions. Data pipelines turn raw data into actionable insights by automating collection, processing, and delivery. Tools like web scraping APIs can simplify the ingestion of structured, up-to-date data, giving your pipelines a reliable foundation to start from.

What Is a Data Pipeline?

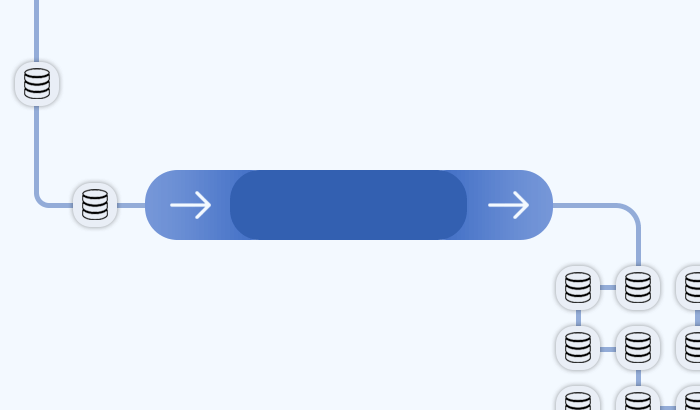

A data pipeline is an automated system that moves data from one point to another – from its source to a storage or analytics destination – while ensuring it’s properly collected, transformed, and ready for use. Instead of manually exporting files or running scripts, data pipelines continuously process and deliver information, making it accessible for analysis, reporting, or integration with other systems.

In a typical setup, a data pipeline performs three main functions:

- Data ingestion – Collecting data from various sources such as databases, APIs, or web pages.

- Data processing – Cleaning, validating, and transforming the data into a standardized format.

- Data storage and delivery – Sending the refined data to a warehouse, data lake, or analytics tool.

For example, a company tracking e-commerce trends might use a web scraping API to gather product data across online stores. This data then flows through the pipeline – being cleaned, normalized, and stored – before powering dashboards that reveal pricing trends or stock changes in real time.
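
The three functions above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the `RAW_PRODUCTS` list is a hypothetical stand-in for the response of a web scraping API, and the function names `ingest`, `process`, and `deliver` are chosen here only to mirror the stages described in the text.

```python
from statistics import mean

# Hypothetical raw records, standing in for data returned by a web scraping API.
RAW_PRODUCTS = [
    {"store": "shop-a", "name": " Widget ", "price": "20.00"},
    {"store": "shop-b", "name": "widget", "price": "22.00"},
    {"store": "shop-b", "name": "Gadget", "price": None},  # missing price
]


def ingest():
    """Ingestion: collect raw records from a source (here, an in-memory list)."""
    return list(RAW_PRODUCTS)


def process(records):
    """Processing: drop rows without a price and normalize names and prices."""
    cleaned = []
    for record in records:
        if record["price"] is None:
            continue
        cleaned.append({
            "store": record["store"],
            "name": record["name"].strip().lower(),
            "price": float(record["price"]),
        })
    return cleaned


def deliver(records):
    """Delivery: aggregate into a shape a dashboard could consume."""
    prices_by_name = {}
    for record in records:
        prices_by_name.setdefault(record["name"], []).append(record["price"])
    return {name: mean(prices) for name, prices in prices_by_name.items()}


average_prices = deliver(process(ingest()))
print(average_prices)
```

In a real pipeline each stage would typically run as a separate, scheduled or event-driven job rather than as one chained function call.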

Key Components of a Data Pipeline

A data pipeline is made up of several interconnected components, each responsible for a specific stage in the data’s journey – from raw input to meaningful output:

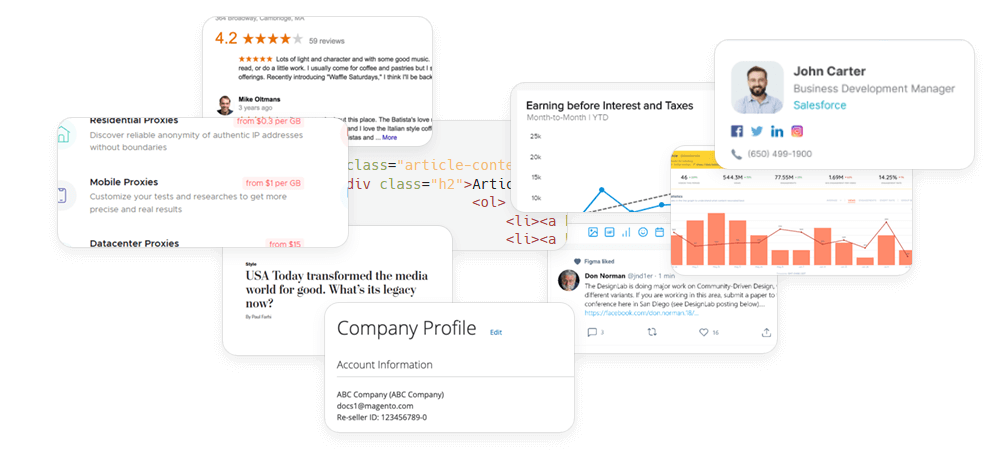

Data Sources

The journey begins with data collection. Sources can include internal systems, third-party databases, APIs, and web data. For businesses that rely on public web information – such as pricing, product availability, or user reviews – a web scraping API can supply structured, up-to-date data without the complexity of building custom crawlers.

Ingestion Layer

This layer gathers data from multiple sources and delivers it to the processing environment. Data ingestion can occur in batch mode (periodically) or in real time (continuously). APIs, message queues, and ETL tools are commonly used here to automate and scale the collection process.
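
The difference between the two ingestion modes can be illustrated with plain Python generators. This is only a sketch: `event_source` is a hypothetical source invented for the example, and a real system would more likely read from a message queue or an API.

```python
from itertools import islice


def event_source():
    """Hypothetical source that yields one record at a time."""
    for i in range(1, 8):
        yield {"id": i, "price": 10 * i}


def ingest_in_batches(source, batch_size):
    """Batch mode: group records and hand them over one chunk at a time."""
    iterator = iter(source)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


def ingest_as_stream(source, handle):
    """Real-time mode: pass each record downstream as soon as it arrives."""
    for record in source:
        handle(record)


# Seven records in batches of three: two full batches and one partial batch.
batch_sizes = [len(batch) for batch in ingest_in_batches(event_source(), 3)]

# The same records handled one by one.
streamed_ids = []
ingest_as_stream(event_source(), lambda record: streamed_ids.append(record["id"]))

print(batch_sizes, streamed_ids)
```

Batching trades latency for throughput: downstream steps see data later, but can process it in bulk.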

Processing Layer

Once data is ingested, it must be cleaned, normalized, and transformed. This stage removes duplicates, fills missing values, and standardizes formats so the information is ready for analysis. Stream processing tools and data transformation frameworks handle this step efficiently.
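
As a rough sketch of this stage, the function below standardizes formats, fills a missing value, and removes duplicates. The field names, the two accepted date formats, and the `USD` default are all assumptions made for the example.

```python
from datetime import datetime

# Hypothetical raw records with inconsistent casing, date formats, and a gap.
RAW = [
    {"sku": "a1", "price": "19.90", "currency": "EUR", "scraped_at": "2024-03-01"},
    {"sku": "A1 ", "price": "19.90", "currency": "EUR", "scraped_at": "01/03/2024"},
    {"sku": "b2", "price": "5.00", "currency": None, "scraped_at": "2024-03-02"},
]

DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")  # assumed input formats


def to_iso_date(text):
    """Standardize a date string to ISO 8601 (YYYY-MM-DD)."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {text!r}")


def clean(records, default_currency="USD"):
    """Normalize each record, fill missing currency, and drop duplicates."""
    seen = set()
    cleaned = []
    for record in records:
        normalized = {
            "sku": record["sku"].strip().upper(),
            "price": float(record["price"]),
            "currency": record.get("currency") or default_currency,
            "scraped_at": to_iso_date(record["scraped_at"]),
        }
        # Deduplicate after normalization so formatting differences don't hide duplicates.
        key = (normalized["sku"], normalized["scraped_at"])
        if key in seen:
            continue
        seen.add(key)
        cleaned.append(normalized)
    return cleaned


cleaned_records = clean(RAW)
print(cleaned_records)
```

Whether a missing value should be filled with a default, estimated, or rejected depends on the field; the default used here is purely illustrative.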

Storage Layer

After transformation, the processed data is stored in a repository such as a data warehouse, data lake, or cloud storage system. This ensures accessibility, durability, and compatibility with analytics platforms.
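
To make the storage step concrete, here is a small sketch that uses Python's built-in `sqlite3` module as a stand-in for a warehouse. The `products` table and its columns are hypothetical; the point is the idempotent load, which lets a batch be re-run without creating duplicate rows.

```python
import sqlite3


def store(connection, records):
    """Load processed records into a table keyed on (sku, scraped_at)."""
    connection.execute(
        "CREATE TABLE IF NOT EXISTS products ("
        "sku TEXT, scraped_at TEXT, price REAL, "
        "PRIMARY KEY (sku, scraped_at))"
    )
    # INSERT OR REPLACE overwrites a row with the same key instead of duplicating it.
    connection.executemany(
        "INSERT OR REPLACE INTO products (sku, scraped_at, price) "
        "VALUES (:sku, :scraped_at, :price)",
        records,
    )
    connection.commit()


connection = sqlite3.connect(":memory:")  # in-memory database for the example
records = [
    {"sku": "A1", "scraped_at": "2024-03-01", "price": 19.9},
    {"sku": "B2", "scraped_at": "2024-03-01", "price": 5.0},
]

store(connection, records)
store(connection, records)  # re-running the same load leaves the row count unchanged

row_count = connection.execute("SELECT COUNT(*) FROM products").fetchone()[0]
print(row_count)
```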

Analytics and Visualization Layer

The final stage turns refined data into insights. Business intelligence tools, dashboards, and data visualization platforms allow stakeholders to explore trends, track KPIs, and make data-driven decisions.

Types of Data Pipelines

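Data pipelines come in several forms, which differ in when data is processed (batch or real time), where it is transformed (ETL or ELT), and where the pipeline runs (cloud or on-premises). These categories are not mutually exclusive: a single pipeline can be, for example, a cloud-based batch ELT pipeline.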

| Type | Description | When to Use | Example Use Case |
|---|---|---|---|
| Batch Pipelines | Process data in groups at scheduled intervals (e.g., hourly, daily). | When real-time updates aren’t critical and efficiency is prioritized. | Aggregating website traffic logs at the end of each day. |
| Real-Time Pipelines | Continuously process and deliver data as it’s generated. | When immediate insights are required, such as monitoring or alerting systems. | Tracking live product price changes from multiple e-commerce platforms. |
| ETL (Extract, Transform, Load) | Extracts data and transforms it before loading, so only clean, structured data reaches storage. | When data quality and consistency are top priorities. | Cleaning and formatting scraped data before storing it in a warehouse. |
| ELT (Extract, Load, Transform) | Loads raw data first, then transforms it within the destination system. | When using scalable cloud storage or modern data warehouses. | Loading large volumes of raw web data into BigQuery for flexible analysis. |
| Cloud-Based Pipelines | Hosted and managed in the cloud for scalability and automation. | When scalability, low maintenance, and cost-efficiency are important. | Using cloud functions to automate data flow from APIs to a data lake. |
| On-Premises Pipelines | Operate within local infrastructure under full organizational control. | When data security or compliance requires local hosting. | Processing sensitive financial records within a private network. |
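
The ETL and ELT rows differ only in where the transformation happens. The sketch below shows both orders side by side, using an in-memory SQLite database as a hypothetical destination; table names and sample rows are invented for the example.

```python
import sqlite3

# Hypothetical raw rows: (name, price as text).
RAW_ROWS = [("Widget ", "19.90"), ("gadget", "5.00")]


def normalize(name, price):
    """Transformation applied in application code (the ETL path)."""
    return name.strip().lower(), float(price)


def run_etl(conn):
    """ETL: transform first, then load only the clean rows."""
    conn.execute("CREATE TABLE etl_products (name TEXT, price REAL)")
    conn.executemany(
        "INSERT INTO etl_products VALUES (?, ?)",
        [normalize(name, price) for name, price in RAW_ROWS],
    )
    return conn.execute("SELECT name, price FROM etl_products ORDER BY name").fetchall()


def run_elt(conn):
    """ELT: load the raw rows as-is, then transform inside the destination with SQL."""
    conn.execute("CREATE TABLE raw_products (name TEXT, price TEXT)")
    conn.executemany("INSERT INTO raw_products VALUES (?, ?)", RAW_ROWS)
    conn.execute(
        "CREATE TABLE elt_products AS "
        "SELECT lower(trim(name)) AS name, CAST(price AS REAL) AS price "
        "FROM raw_products"
    )
    return conn.execute("SELECT name, price FROM elt_products ORDER BY name").fetchall()


conn = sqlite3.connect(":memory:")
etl_result = run_etl(conn)
elt_result = run_elt(conn)
print(etl_result, elt_result)
```

Both paths end with the same clean table, but the ELT path also keeps `raw_products`, so the transformation can be revised and re-run later without re-ingesting.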

How to Solve Common Challenges in Data Pipelines

Even the most well-designed data pipelines face operational and architectural challenges. As data volumes grow and new sources are added, maintaining consistency, scalability, and accuracy becomes increasingly complex.

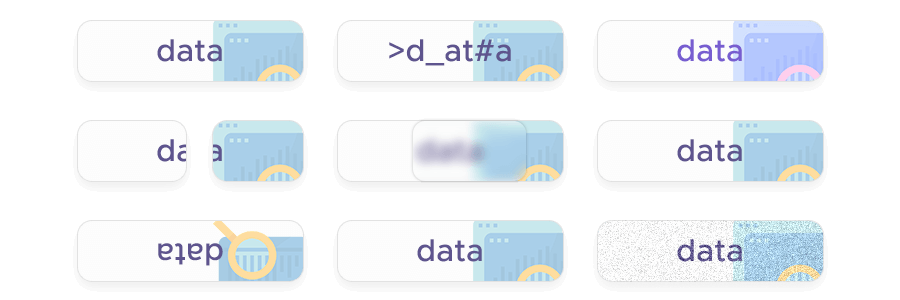

Data Quality Issues

Inconsistent, incomplete, or duplicated data can distort analytics and decision-making. These issues often arise from unreliable or unstructured sources.

Solution: Implement validation and cleaning steps within your pipeline. Start with dependable collection methods – such as structured web scraping APIs – to ensure high-quality input from the beginning.
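
One way to picture a validation step is a function that separates usable records from rejected ones and records the reason for each rejection. The rules below (a required `sku`, a non-negative numeric `price`) are assumptions chosen for illustration.

```python
def validate(record):
    """Return a list of problems found in a record; an empty list means valid."""
    problems = []
    if not record.get("sku"):
        problems.append("missing sku")
    price = record.get("price")
    if isinstance(price, bool) or not isinstance(price, (int, float)):
        problems.append("price is not a number")
    elif price < 0:
        problems.append("price is negative")
    return problems


def split_valid(records):
    """Route each record to the valid list or to the rejected list with reasons."""
    valid, rejected = [], []
    for record in records:
        problems = validate(record)
        if problems:
            rejected.append((record, problems))
        else:
            valid.append(record)
    return valid, rejected


valid, rejected = split_valid([
    {"sku": "A1", "price": 19.9},
    {"sku": "", "price": 5.0},
    {"sku": "C3", "price": "n/a"},
    {"sku": "D4", "price": -2},
])
print(len(valid), [problems for _, problems in rejected])
```

Keeping rejected records with their reasons, rather than silently dropping them, makes it easier to trace quality problems back to a specific source.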

Scalability Limitations

As data sources multiply, pipelines must handle larger volumes and faster processing rates.

Solution: Design for scalability from the start. Use cloud-native tools, distributed processing frameworks, and automated orchestration systems to accommodate growing workloads seamlessly.

Integration Complexity

Combining data from different systems, formats, and APIs can cause compatibility and synchronization issues.

Solution: Standardize data formats and employ middleware or transformation tools that unify multiple sources into a consistent structure.
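
A simple form of this is one small adapter per source that maps its format onto a shared schema. In the sketch below, the JSON and CSV feeds and their field names are hypothetical; the shared schema is `sku`, `price`, and `source`.

```python
import csv
import io
import json

# Two hypothetical sources describing products in different formats.
JSON_FEED = '[{"productId": "A1", "priceUsd": 19.9}]'
CSV_FEED = "sku,price\nB2,5.00\n"


def from_json_feed(text):
    """Adapter: map the JSON feed's field names onto the shared schema."""
    return [
        {"sku": item["productId"], "price": float(item["priceUsd"]), "source": "json_feed"}
        for item in json.loads(text)
    ]


def from_csv_feed(text):
    """Adapter: parse CSV rows and convert the price from text to a number."""
    return [
        {"sku": row["sku"], "price": float(row["price"]), "source": "csv_feed"}
        for row in csv.DictReader(io.StringIO(text))
    ]


unified = from_json_feed(JSON_FEED) + from_csv_feed(CSV_FEED)
print(unified)
```

With this layout, adding a new source means writing one more adapter, while everything downstream keeps working against the same schema.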

Monitoring and Maintenance

Pipelines are dynamic systems – a single API change or data format update can cause failures.

Solution: Set up monitoring, alerting, and logging tools to detect anomalies early. Automate maintenance routines wherever possible to minimize downtime and manual intervention.
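
As a minimal sketch of logging plus automated recovery, the helper below retries a failing step and logs every attempt using Python's standard `logging` module. The `flaky_fetch` function is a hypothetical step that simulates two upstream failures; the zero-second delay is only there to keep the example fast.

```python
import logging
import time

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("pipeline")


def run_with_retries(step, attempts=3, delay_seconds=0.0):
    """Run a pipeline step, logging each failure and retrying up to `attempts` times."""
    for attempt in range(1, attempts + 1):
        try:
            result = step()
            logger.info("step succeeded on attempt %d", attempt)
            return result
        except Exception:
            # logger.exception records the traceback alongside the message.
            logger.exception("step failed on attempt %d of %d", attempt, attempts)
            if attempt == attempts:
                raise  # give up and surface the error to the caller or alerting system
            time.sleep(delay_seconds)


calls = {"count": 0}


def flaky_fetch():
    """Hypothetical step that fails twice before succeeding."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("simulated upstream failure")
    return ["record"]


result = run_with_retries(flaky_fetch)
print(result, calls["count"])
```

In practice the delay would usually grow between attempts, and the final failure would trigger an alert rather than only a log entry.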

Compliance and Security

Data pipelines must meet legal and organizational standards for privacy, access control, and data storage.

Solution: Ensure compliance with regulations such as GDPR or CCPA. Encrypt sensitive data in transit and at rest, and restrict access using role-based permissions.
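
Role-based permissions can be applied at the field level, so each role sees only the columns it needs. The roles and field names below are hypothetical, and the sketch covers access restriction only; encryption in transit and at rest would be handled separately by the transport and storage layers.

```python
# Hypothetical mapping from role to the fields that role may read.
ROLE_FIELDS = {
    "analyst": {"order_id", "amount"},
    "support": {"order_id", "email"},
}


def view_for(role, record):
    """Return only the fields the given role may see; unknown roles see nothing."""
    allowed = ROLE_FIELDS.get(role, set())
    return {field: value for field, value in record.items() if field in allowed}


order = {"order_id": 42, "amount": 99.5, "email": "jane@example.com"}

analyst_view = view_for("analyst", order)
support_view = view_for("support", order)
guest_view = view_for("guest", order)
print(analyst_view, support_view, guest_view)
```

Defaulting unknown roles to an empty view means a misconfigured role fails closed instead of exposing data.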

How to Build a Robust Data Pipeline

Designing an effective data pipeline requires careful planning, the right tools, and a focus on scalability and automation. Here’s a step-by-step approach to building a reliable and efficient data pipeline:

1. Define Your Data Goals

Start by identifying what business questions you want to answer or what processes you want to improve. Clear objectives will help determine which data to collect, how often, and in what format.

2. Choose Reliable Data Sources

Select sources that provide accurate, relevant, and up-to-date information. For external data, APIs and web scraping solutions can automate collection at scale. Using a web scraping API helps ensure consistent, structured input that’s easy to integrate into downstream systems.

3. Design the Ingestion Process

Plan how data will enter the pipeline – through batch jobs, event streams, or continuous API calls. Use automated ingestion tools to handle multiple sources without manual intervention.

4. Clean and Transform the Data

Raw data is rarely ready for use. Apply transformation steps such as deduplication, validation, normalization, and enrichment. Standardizing formats early makes analysis faster and more accurate later.
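
The four transformation steps named above can be written as small functions and chained in a fixed order. This is one possible arrangement rather than a prescribed one; the sample records and the `price_band` enrichment rule are invented for the example.

```python
from functools import reduce


def deduplicate(records):
    """Keep the first record seen for each sku."""
    seen, unique = set(), []
    for record in records:
        if record["sku"] not in seen:
            seen.add(record["sku"])
            unique.append(record)
    return unique


def validate(records):
    """Drop records with a negative price."""
    return [record for record in records if record["price"] >= 0]


def normalize(records):
    """Standardize sku casing."""
    return [{**record, "sku": record["sku"].upper()} for record in records]


def enrich(records):
    """Add a derived field (hypothetical rule: 100 or more counts as premium)."""
    return [
        {**record, "price_band": "premium" if record["price"] >= 100 else "standard"}
        for record in records
    ]


def compose(*steps):
    """Build one transform that applies the given steps from left to right."""
    return lambda records: reduce(lambda current, step: step(current), steps, records)


transform = compose(deduplicate, validate, normalize, enrich)

transformed = transform([
    {"sku": "a1", "price": 120.0},
    {"sku": "a1", "price": 120.0},
    {"sku": "b2", "price": -1.0},
    {"sku": "c3", "price": 8.0},
])
print(transformed)
```

Keeping each step as its own function makes it possible to test, reorder, or replace steps independently.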

5. Store Data Efficiently

Choose the right storage system based on your pipeline’s purpose – whether it’s a data warehouse for structured analytics, a data lake for raw data, or cloud storage for scalability and cost efficiency.