Large language models like ChatGPT and Gemini have transformed the way we interact with technology – but behind every intelligent output lies an immense foundation of training data. From news articles to product reviews, the quality and diversity of that data determine how well a model understands language, context, and nuance. In this article, we’ll explore how Infatica can help AI teams access the data they need.

What Are Large Language Models and How Do They Learn?

Large language models (LLMs) are a category of artificial intelligence systems designed to understand, generate, and manipulate human language. At the heart of these models is a neural network architecture known as the transformer, which enables them to process vast sequences of text and learn contextual relationships between words, phrases, and concepts.

LLMs are trained on enormous datasets that may include websites, books, academic papers, news articles, forums, and more. Through a process known as unsupervised learning, the model attempts to predict the next word in a sequence – an exercise repeated billions or even trillions of times. Over time, it learns not just grammar and syntax, but also style, factual knowledge, reasoning patterns, and even subtle nuances of meaning.

The scale and quality of data are critical to an LLM’s performance. A diverse and representative dataset allows a model to generalize across languages, domains, and cultures – enabling it to answer questions about quantum physics one moment and compose poetry the next. This is why leading AI labs often invest heavily in building and curating massive corpora of text.

But raw data alone isn’t enough. It must be cleaned, filtered for quality, and sometimes fine-tuned to meet specific goals – whether that’s generating safe outputs, reducing bias, or excelling in a particular industry domain. This is where thoughtful data collection strategies come into play – laying the groundwork for the model’s capabilities before the first line of code is even trained.

The Role of Data Collection in Training LLMs

The capabilities of a large language model are only as strong as the data it learns from. Data collection is a foundational step in building any LLM – determining not just how well the model performs, but also what it knows, how it communicates, and who it represents.

Diverse Data Fuels General Intelligence

To generate intelligent and context-aware responses, an LLM needs exposure to a wide variety of content. This includes:

- Web content: blogs, news sites, forums, and Wikipedia offer natural, human-written language in many styles and topics.

- Books and academic papers: these contribute depth, structure, and formal knowledge.

- Conversational data: transcripts from discussions, help desks, or social platforms help models learn how people talk and interact.

- Code repositories: for models that handle programming tasks, platforms like GitHub provide valuable learning material.

A truly capable model must be trained on data that spans languages, dialects, cultures, industries, and media types. This breadth helps ensure that the model doesn’t just reflect one perspective or region but can generalize across many.

The Importance of Fresh, High-Quality Data

LLMs are often trained on snapshots of the internet – frozen in time. But information evolves. To stay relevant, models must have access to up-to-date data that reflects current events, social trends, and new developments in language and knowledge.

Equally important is data quality. Models trained on unfiltered or poorly selected datasets risk learning and reproducing misinformation, bias, or low-value patterns. That’s why data curation – identifying, cleaning, and organizing content – is as critical as the scraping or sourcing itself.

Ethical and Legal Considerations

As AI development accelerates, questions of data ethics have come into sharper focus. Not all publicly accessible content is fair game, and responsible data collection must take into account:

- Intellectual property rights

- Data licensing and terms of service

- Privacy and personally identifiable information (PII)

Leading AI developers are increasingly investing in transparent, documented, and compliant data sourcing practices – both to meet legal standards and to build trustworthy AI systems.

Web Scraping as a Key Enabler of Dataset Creation

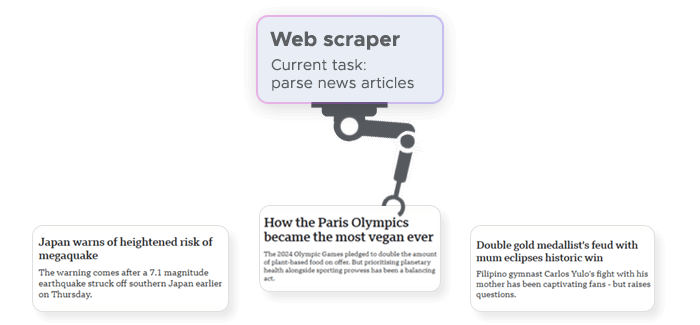

To train a powerful large language model, developers need access to vast and varied textual data – often far beyond what’s available in pre-packaged datasets. This is where web scraping becomes an essential tool.

Web scraping is the automated process of extracting information from websites. When used responsibly, it enables organizations to collect fresh, diverse, and high-volume data across domains, languages, and geographies – exactly the kind of material that fuels effective language models.

Why Scraping Matters for LLM Training

- Scale: Manually curating millions or billions of data points is impossible. Scraping automates the discovery and collection of relevant content at scale.

- Diversity: Scraping allows access to content across industries, regions, and writing styles – from product reviews in Japan to legal documents in the U.S.

- Freshness: The web is constantly evolving. Scraping helps teams maintain up-to-date datasets that reflect current events, terminology, and usage patterns.

- Customization: Need data from a specific niche – like technical forums, scientific publications, or job boards? Scraping can target those sources precisely.

Whether sourcing multilingual content for a globally capable model or gathering domain-specific data for a fine-tuned LLM, web scraping enables highly adaptable data collection pipelines.

Common Challenges in Scraping for LLMs

While web scraping is a powerful enabler of large-scale data collection, it’s also a technically complex and resource-intensive process – especially when it comes to gathering training data for large language models. From accessing content to ensuring its quality, scraping at this scale presents several key challenges.

1. Anti-Bot Protection and Access Restrictions

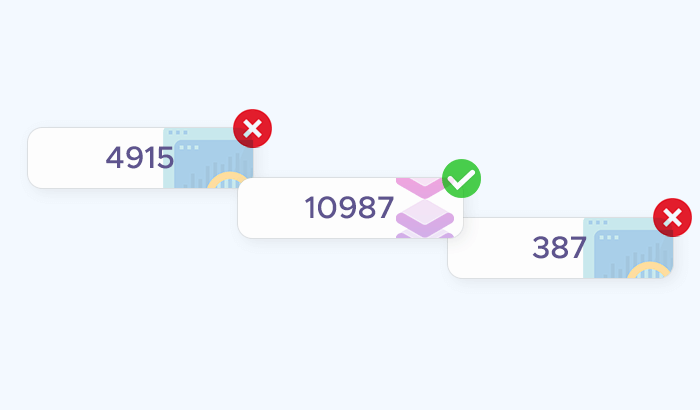

Many websites deploy advanced mechanisms to detect and block automated scraping. Overcoming these barriers often requires rotating IP addresses, managing headless browsers, and using residential or datacenter proxy networks – all while respecting ethical scraping guidelines and avoiding disruption to the target sites:

- CAPTCHAs and JavaScript-based challenges

- Rate limiting and IP bans

- Geo-restrictions, which limit access to content based on user location

2. Handling Dynamic and Diverse Web Structures

Today’s web content is increasingly dynamic. This diversity makes it difficult to create one-size-fits-all scrapers. Instead, scalable scraping efforts need robust infrastructure, frequent script adjustments, and automation workflows to monitor for structural changes. Pages may:

- Load content asynchronously via JavaScript

- Use infinite scrolling or popups

- Vary structurally even within the same site

3. Geolocation and Multilingual Content

Language models benefit from data that reflects the linguistic and cultural diversity of the web. To access localized content – such as reviews, news, or forums in different countries – scraping efforts must simulate users in specific locations. This often requires:

- Geolocated proxies

- Multilingual parsing and character encoding support

- Locale-aware filtering and labeling

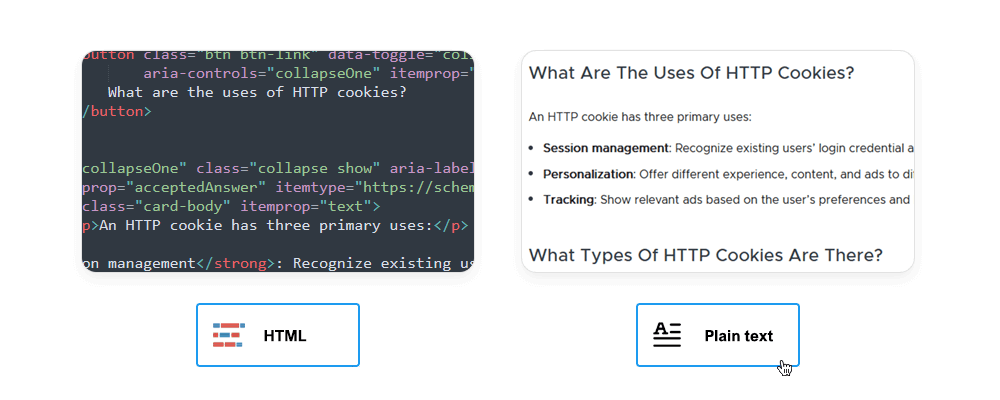

4. Data Cleaning and Deduplication

Raw scraped data is rarely ready for model training. To be useful, this data must undergo cleaning, deduplication, formatting, and enrichment – all of which add significant complexity to the pipeline. It often includes:

- Irrelevant content (e.g., navigation menus, ads, or boilerplate text)

- Duplicate pages or near-identical versions across domains

- Spam, profanity, or low-quality material

How Infatica Helps Collect Training-Ready Data

Building large language models demands data that is diverse, dynamic, and ethically sourced – and gathering that data at scale requires far more than just web scraping scripts. At Infatica, we provide the infrastructure, tools, and expertise that power high-quality data collection for AI development.

Robust Scraping Infrastructure

Infatica’s global proxy network – including residential, mobile, and datacenter proxies – enables teams to bypass geo-restrictions and access localized content from virtually anywhere in the world. Whether you need reviews from German e-commerce sites, product descriptions from Japan, or forum data from Latin America, our network helps you reach it.

- Geolocation targeting: Collect region-specific data for multilingual training

- IP rotation and management: Reduce blocks, bans, and throttling

- High-speed access: Maintain scraping efficiency, even at scale

Scraping-as-a-Service: Custom Solutions for Complex Needs

For teams without the internal bandwidth or infrastructure to manage large-scale scraping, Infatica offers scraping-as-a-service – a tailored solution for collecting, cleaning, and delivering structured datasets from the web. Whether you're gathering academic content, tech forum discussions, or product metadata, we adapt the pipeline to your goals – we handle everything from:

- Navigating anti-bot systems and dynamic content

- Structuring and formatting the data

- Ongoing maintenance to adapt to website changes

Ready-to-Use Datasets

For those looking to accelerate their projects, Infatica also offers pre-collected and custom-built datasets. These can include:

- E-commerce data: product descriptions, reviews, prices

- News and media: articles from global publishers

- Public discussions: forums, social comments, Q&A sites

- Industry-specific content: finance, travel, health, and more

We ensure all datasets are ethically collected and filtered for quality, with metadata and labeling available on request.

Compliant and Responsible Data Collection

We understand that building trust in AI also means building trust in the data that powers it. That’s why Infatica adheres to responsible scraping practices, with a strong focus on:

- Compliance with website terms and data privacy standards

- Respect for robots.txt and ethical collection policies

- Transparent delivery pipelines